Benny Chor passed away on June 10, 2021. Luca Trevisan had a blog post on the news.

We present a guest post on Benny Chor's life and works by Oded Goldreich. The post refers to many papers; they are listed at the end.

Benny Chor was born on December 23rd, 1956, and grew up in Tel-Aviv, Israel. He studied Mathematics at the Hebrew University, receiving a B.Sc. in 1980 and an M.Sc. in 1981. He then switched to studying Computer Science at MIT, and graduated in 1985 with a PhD thesis titled

Two Issues in Public Key Cryptography -- RSA Bit Security and a New Knapsack Type System

which received an ACM Distinguished Dissertation award. After post-doctoral periods at MIT and Harvard, he took a faculty position at the Computer Science Department of the Technion (1987-2001), and then at Tel-Aviv University, where he served as the chairman of the department for two years. He died on June 10th 2021, from a terminal disease.

Although Benny was a very articulate and verbal person, I find it impossible to describe his personality in words. The point is that words cannot capture the experience of interacting with him, which was always sheer fun. Still, I guess I should say something personal as a close friend of his. So I will just mention that he lived happily with Metsada, whom he met in the summer of 1981, and that they lovingly raised three kids: Arnon, Omer and Aya. Actually, let me also mention his life-long close relationship with his brother, Kobi.

Focusing on his contributions to science, I will confine myself to the areas of cryptography and randomized computation. Still, let me mention that in the mid-1990s, Benny's research interests gradually shifted to computational biology, but I will not review his contributions to that area, since it is very remote from my own expertise.

In my opinion, Benny's most important contribution to cryptography is the fundamental paper on Verifiable Secret Sharing [CGMA]. Loosely speaking, Verifiable Secret Sharing is a method to share a secret so that any majority of share-holders may reconstruct the secret, whereas no minority may do so, and still each share-holder can verify that the share that they got is a valid one. This primitive had a tremendous impact on the development of secure multi-party protocols, and almost all subsequent works in this area utilize it.

Additional research milestones of Benny, in the area of Cryptography, include the proof of the existence of a "hard-core" in the RSA and Rabin functions [BCS, ACGS], the introduction and design of Private Information Retrieval (PIR) schemes [CGKS] (as well as their computational counterparts [CG97]), and the introduction and design of Traitor Tracing schemes [CFNP]. Each of these works deserves more than the foregoing brief mention, yet I'm not even mentioning other important works like [CK].

Turning to the area of Randomized Computation, Benny's contributions span diverse areas ranging from the manipulation of randomness to the use of randomness in complexity theory and distributed computing. In particular, his work on weak sources of randomness [CG88] identified min-entropy (of the source) as the key parameter for randomness extraction and presented a simple two-source extractor. Distilling an aspect of [ACGS], his work on pairwise-independent sampling [CG89] demonstrates the general applicability of this method. His works in distributed computing include a randomized Byzantine Agreement protocol [CC] that beats the deterministic bound without using unproven assumptions. Lastly, let me just mention a couple of his complexity-theoretic works: [BCGL, CCGHHRR].

Benny was a superb teacher and a devoted graduate student adviser. It is tempting to try listing the numerous educational projects that he initiated, and his former students, but these lists will be too long and the risk of a crucial omission is too high. So let me end by expressing the feeling of loss that is shared by many in our community, which adds to my personal sorrow.

|

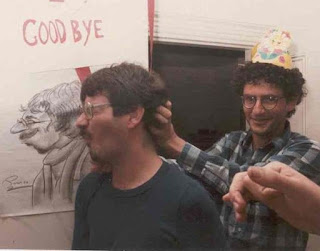

| Oded and Benny |

|

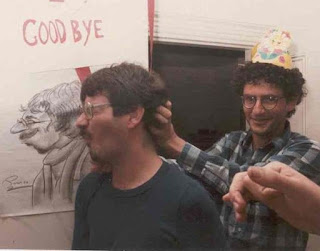

| Benny with his family's namesake (Chor = Bull) |

[ACGS] Werner Alexi, Benny Chor, Oded Goldreich, Claus-Peter Schnorr: RSA and Rabin Functions: Certain Parts are as Hard as the Whole. SIAM J. Comput. 17(2): 194-209 (1988)

[BCGL] Shai Ben-David, Benny Chor, Oded Goldreich, Michael Luby: On the Theory of Average Case Complexity. J. Comput. Syst. Sci. 44(2): 193-219 (1992)

[BCS] Michael Ben-Or, Benny Chor, Adi Shamir: On the Cryptographic Security of Single RSA Bits. STOC 1983: 421-430

[CCGHHRR] Richard Chang, Benny Chor, Oded Goldreich, Juris Hartmanis, Johan Håstad, Desh Ranjan, Pankaj Rohatgi: The Random Oracle Hypothesis Is False. J. Comput. Syst. Sci. 49(1): 24-39 (1994)

[CC] Benny Chor, Brian A. Coan: A Simple and Efficient Randomized Byzantine Agreement Algorithm. IEEE Trans. Software Eng. 11(6): 531-539 (1985)

[CFNP] Benny Chor, Amos Fiat, Moni Naor, Benny Pinkas: Tracing traitors. IEEE Trans. Inf. Theory 46(3): 893-910 (2000)

[CG97] Benny Chor, Niv Gilboa: Computationally Private Information Retrieval. STOC 1997: 304-313

[CG88] Benny Chor, Oded Goldreich: Unbiased Bits from Sources of Weak Randomness and Probabilistic Communication Complexity. SIAM J. Comput. 17(2): 230-261 (1988)

[CG89] Benny Chor, Oded Goldreich: On the power of two-point based sampling. J. Complex. 5(1): 96-106 (1989)

[CGKS] Benny Chor, Oded Goldreich, Eyal Kushilevitz, Madhu Sudan: Private Information Retrieval. J. ACM 45(6): 965-981 (1998)

[CGMA] Benny Chor, Shafi Goldwasser, Silvio Micali, Baruch Awerbuch: Verifiable Secret Sharing and Achieving Simultaneity in the Presence of Faults.

[CK] Benny Chor, Eyal Kushilevitz: A Zero-One Law for Boolean Privacy. STOC 1989: 62-72