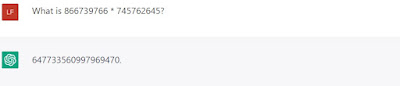

Despite OpenAI's claim that ChatGPT has improved mathematical capabilities, we don't get far multiplying large numbers.

Typical for ChatGPT, the answer passes the smell test. It has the right number of digits, and the first and last couple of digits are correct. But the real answer is 646382140418841070, quite different from the number given.
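To make the "smell test" concrete, here is a small sketch of the checks a quick glance performs. The function name `smell_test` is made up for illustration, and the wrong value below is a made-up stand-in, not ChatGPT's actual output (which appears only in the screenshot).

```python
def smell_test(claimed: int, actual: int, k: int = 2) -> dict:
    """Compare two integers the way a quick human glance would."""
    c, a = str(claimed), str(actual)
    return {
        "same_length": len(c) == len(a),      # right number of digits?
        "same_leading": c[:k] == a[:k],       # first k digits agree?
        "same_trailing": c[-k:] == a[-k:],    # last k digits agree?
        "equal": claimed == actual,           # the only check that matters
    }

actual = 646382140418841070    # the correct product quoted in the post
made_up = 646379812345641070   # hypothetical wrong answer, NOT ChatGPT's output

print(smell_test(made_up, actual))
# {'same_length': True, 'same_leading': True, 'same_trailing': True, 'equal': False}
```

Every superficial check passes, yet the numbers differ in the middle digits.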

As far as I know, multiplication isn't known to be in TC0, the complexity class that roughly corresponds to neural nets. [Note Added: Multiplication is in TC0. See comments.] Also, functions learned by deep learning can often be inverted by deep learning. So if AI can learn how to multiply, it might also learn how to factor.

But what about addition? Addition is known to be in TC0 and ChatGPT performs better.

The correct answer is 1612502411, so ChatGPT is only one digit off but still wrong. The TC0 algorithm needs some carry-lookahead tricks that are probably hard to learn. Addition is easier if you work from right to left, but ChatGPT has trouble reversing numbers. There's a limit to its self-attention.
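For reference, the right-to-left method looks like this as code. It is a minimal sketch of ordinary schoolbook addition, not the constant-depth TC0 construction, and the operands in the usage line are placeholders since the ones from the screenshot aren't reproduced in the text.

```python
def add_right_to_left(x: str, y: str) -> str:
    """Add two non-negative decimal strings, least significant digit first."""
    # Reverse both numbers so index 0 is the ones digit.
    xr, yr = x[::-1], y[::-1]
    digits = []
    carry = 0
    for i in range(max(len(xr), len(yr))):
        dx = int(xr[i]) if i < len(xr) else 0
        dy = int(yr[i]) if i < len(yr) else 0
        # Each step needs only the current digits and the incoming carry.
        carry, digit = divmod(dx + dy + carry, 10)
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))
    # Digits were produced ones-first, so reverse them back for display.
    return "".join(reversed(digits))

print(add_right_to_left("999999", "1"))  # 1000000
```

The usage line shows why writing the answer left to right is harder: the leading digit of the sum depends on a carry that starts at the far right and ripples through every position.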

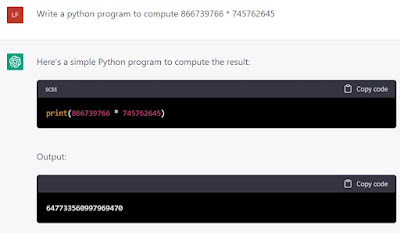

ChatGPT can't multiply but it does know how to write a program to multiply.

It still claims the result will be the same as before. Running the program gives the correct answer 646382140418841070.
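The program ChatGPT wrote appears only in the screenshot, so the following is not it. It is just a sketch of the grade-school method, with placeholder operands, to show how little code exact multiplication takes. The name `schoolbook_multiply` is an assumption for illustration.

```python
def schoolbook_multiply(x: str, y: str) -> str:
    """Multiply two non-negative decimal strings with the grade-school method."""
    xr = [int(d) for d in reversed(x)]   # ones digit first
    yr = [int(d) for d in reversed(y)]
    result = [0] * (len(xr) + len(yr))   # a product has at most this many digits
    for i, dx in enumerate(xr):
        carry = 0
        for j, dy in enumerate(yr):
            total = result[i + j] + dx * dy + carry
            carry, result[i + j] = divmod(total, 10)
        result[i + len(yr)] += carry     # final carry of this row
    text = "".join(map(str, reversed(result))).lstrip("0")
    return text or "0"

# Placeholder operands; Python's built-in integers are exact, so they serve as a check.
a, b = "123456789", "987654321"
print(schoolbook_multiply(a, b) == str(int(a) * int(b)))  # True
```

In practice `int(a) * int(b)` alone would do, since Python integers have arbitrary precision.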

ChatGPT runs on a general-purpose computer, so one could hope for a later version that can determine when it's given a math question, write a program, and run it. That's probably too dangerous, since we would want to avoid a code injection vulnerability. But it could still use an API to WolframAlpha or some other math engine, or a chess engine to play chess, and so on.
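One way to get the benefit without running arbitrary code is to hand the expression to a tiny evaluator that accepts only arithmetic. The sketch below is an illustration under that assumption, not a description of how ChatGPT or any real math API works. It uses Python's standard `ast` module to parse the expression and rejects everything except integer literals, `+`, `-`, and `*`.

```python
import ast
import operator

# Whitelisted binary operators. Exponentiation is left out on purpose,
# since something like 9**9**9 would be a cheap denial-of-service.
_ALLOWED = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
}

def safe_arithmetic(expr: str) -> int:
    """Evaluate an integer +, -, * expression without calling eval()."""
    def walk(node: ast.AST) -> int:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _ALLOWED:
            return _ALLOWED[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Names, calls, attribute access, etc. all end up here.
        raise ValueError("unsupported expression")
    return walk(ast.parse(expr, mode="eval"))

print(safe_arithmetic("12345 * 6789 + 1") == 12345 * 6789 + 1)  # True

try:
    safe_arithmetic("__import__('os').system('echo hi')")
except ValueError as err:
    print("rejected:", err)  # rejected: unsupported expression
```

The input is parsed but never executed, so an injected function call is refused instead of run.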

What do you mean by "As far as I know, multiplication isn't known to be in TC0"? Wikipedia says "TC0 contains several important problems, such as sorting n n-bit numbers, multiplying two n-bit numbers, integer division[1] or recognizing the Dyck language with two types of parentheses." with [1] being

[1] Hesse, William; Allender, Eric; Mix Barrington, David (2002). "Uniform constant-depth threshold circuits for division and iterated multiplication". Journal of Computer and System Sciences. 65 (4): 695–716.

TC0 is very powerful; it CAN compute multiplication, even the iterated version: https://people.cs.rutgers.edu/~allender/papers/division.pdf

My apologies to Eric, William and Dave. I should have remembered this paper. I updated the post.

A few weeks back I generated two big primes, multiplied them together, and asked ChatGPT to factor the product. It gave me an incorrect answer. I then asked it to factor the prime number 1009, and it returned 17*59, which is the factorization of 1003. I was not impressed.
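Checking a claimed factorization is mechanical: multiply the factors back together and test each for primality. A minimal sketch, with illustrative function names and plain trial division (fine for numbers this small, not for big primes):

```python
from math import isqrt, prod
from typing import Sequence

def is_prime(n: int) -> bool:
    """Trial division up to the integer square root of n."""
    if n < 2:
        return False
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return False
    return True

def check_factorization(n: int, factors: Sequence[int]) -> bool:
    """True only if the factors are all prime and multiply back to n."""
    return prod(factors) == n and all(is_prime(f) for f in factors)

print(check_factorization(1009, [17, 59]))  # False
print(17 * 59)                              # 1003
print(is_prime(1009))                       # True
```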

As I've pointed out before, the game that LLMs play has no possible way of interfacing between the stuff it generates and any sort of model of reality or meaning. So when it says 17*59 is the factorization of 1009, it has no way of identifying that those two numbers are supposed to multiply to result in the third. If somewhere in its database, someone happened to say "17*59 is the factorization of 1003", then it might return that. But not because it in any way "understood" factorization, but because that was a string of tokens in its database.

These things are random word salad generators, no more, no less.

I'm beginning to understand Joe Weizenbaum better. I used to eat lunch with him at MIT back in the day. He was a sweet gentle bloke with lots of neat stories. But he was vituperatively opposed to AI. His program ELIZA was a stupid parlor trick, but people would type their deepest secrets into it. This freaked him out. Similarly, from my standpoint, LLMs are fundamentally uninteresting: there's no intellectual value* there at all, whatsoever. They're parlor tricks. But people are spending time with them. It's irritating.

*: Really, there isn't. Even Yann LeCun sees them as a dead-end off-ramp from the highway towards computer intelligence.

See: https://garymarcus.substack.com/p/some-things-garymarcus-might-say

Maybe the "jailbroken" version DAN performs better?

https://twitter.com/TyrantsMuse/status/1623054167865495554

DJL: See this paper about how a language model manages to solve modular addition, by gradually figuring out how to represent it as complex multiplication: https://arxiv.org/abs/2301.05217 I don't claim that this generalizes to more complex problems, but it is at least an existence proof that the Transformer architecture can sometimes do more than just memorization.
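The identity behind "modular addition as complex multiplication" fits in a few lines. This is only the underlying math, not the network's learned algorithm from the paper, and the modulus `p = 113` and the function name are chosen here purely for illustration. Each residue is mapped to a point on the unit circle; multiplying two such points adds their angles, and the angle of the product decodes to (a + b) mod p.

```python
import cmath
import math

def mod_add_via_rotation(a: int, b: int, p: int) -> int:
    """Compute (a + b) mod p by multiplying p-th roots of unity."""
    za = cmath.exp(2j * math.pi * a / p)   # a -> e^(2*pi*i*a/p)
    zb = cmath.exp(2j * math.pi * b / p)   # b -> e^(2*pi*i*b/p)
    z = za * zb                            # angles add: e^(2*pi*i*(a+b)/p)
    angle = cmath.phase(z)                 # in the range [-pi, pi]
    # Convert the angle back to a residue; % p folds negative angles around.
    return round(angle * p / (2 * math.pi)) % p

p = 113  # illustrative modulus
print(all(mod_add_via_rotation(a, b, p) == (a + b) % p
          for a in range(p) for b in range(p)))  # True
```

The wrap-around of the modulus comes for free from the circle, which is why no carries or explicit remainder step are needed.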

The imitation-of-intelligence endeavors are just the same old nonsense as that of the alchemists from eons ago. In their defense, the alchemists at least had no way of verifying their physics as we do today. This excuse is not available to the purveyors of artificial imitation of intelligence, and so they're going to get treated even worse than the alchemists, eventually.

The whole point of animal intelligence is that their (our) brains spend most of their energy and time in undirected, unsupervised wandering thoughts, not unlike dreaming, and much less time and energy on directed task-oriented thinking. This fact has been unequivocally established across animals ranging from fruit flies to molluscs (including octopuses) to mammals by measuring brain activation signals akin to EEG; the animal brains stay active whether or not they are pursuing a task. It now looks as though pattern recognition capabilities arose much, much earlier than intelligence.

I sincerely recommend reading "Metazoa" by Peter Godfrey-Smith to hear from someone who has orders of magnitude more expertise on animal intelligence than I do.

The chemist who discovered the benzene ring, for example, claims it occurred to him in a dream... Of course he was working on characterizing the structure of benzene, but so were several other chemists at that time, so why did it occur to him before it did to others? Was he more intelligent than the others? I know the AII purveyors will seize upon this to say their ML algorithms these days routinely perform much more complex protein structure prediction. But I suppose the reason the benzene ring is brought up so frequently is that it led to a realm of organic compound structures that were unknown to chemists at that time. There is a vast abyssal difference between predicting the structure of benzene and interpreting what the result implies for organic compounds.

To summarize: Nature has evolved and optimized intelligence in certain specific, extremely robust ways that we do not understand yet and likely will not for a very very very very long time; evolution employed quadrillions upon quadrillions of often simultaneously running experiments over more than 500 million years to converge on this impenetrable, exceptionally beautiful feature. It is preposterous to claim humans can reinvent this as an artifact (however ingenious they reckon they are).

ChatGPT can't solve logical puzzles yet either.
