- It is amazing that the factoring and RSA problems are still hard (for classical computers), more than 40 used after they were proposed for cryptography. The same goes for the discrete-logarithm problem (in certain groups).

- It is not easy to find other hard mathematical problems! Code-based cryptography has been around about as long as factoring, but has been somewhat unpopular for reasons of efficiency. Lattice-based cryptosystems still seem to give the leading candidates.

- We need more (non-cryptographers) studying cryptographic assumptions. The attacks on SIKE involved deep mathematics; attacks on lattice problems may involve algorithmic ideas that cryptographers haven't thought of.

Computational Complexity and other fun stuff in math and computer science from Lance Fortnow and Bill Gasarch

Wednesday, August 31, 2022

The NIST Process for Post-Quantum Cryptography

Wednesday, August 24, 2022

Computational Intractability: A Guide to Algorithmic Lower Bounds. First draft available! Comments Welcome!

(This post is written by Erik Demaine, William Gasarch, and Mohammad Hajiaghayi)

In 1979 Garey and Johnson published the classic

Computers and Intractability: A Guide to NP-Completeness

There has been A LOT of work on lower bounds since then.

Topics that were unknown in 1979 include

Parameterized Complexity,

Lower bounds on approximation,

Other hardness assumptions (ETH, 3SUM-conjecture, APSP-conjecture, UGC, Others),

Online Algorithms,

Streaming Algorithms,

Polynomial Parity Arguments,

Parallelism, and

Many new problems have been shown complete or hard in NP, PSPACE, and other classes.

Hence a sequel is needed. While it is impossible for one book to encompass all, or even a large fraction, of the work since then, we are proud to announce a book that covers some of that material:

Computational Intractability: A Guide to Algorithmic Lower Bounds

by Erik Demaine, William Gasarch, and Mohammad Hajiaghayi. MIT Press. 2024

See HERE for a link to a first draft.

We welcome corrections, suggestions and comments on the book. Either leave a comment on this blog post or emailing us at hardness-book@mit.edu

Monday, August 22, 2022

20 Years of the Computational Complexity Weblog

I first posted on this blog twenty years ago today, still the oldest and longest running weblog in theoretical computer science, possibly in all of computer science. In those twenty years we've had nearly 3000 posts and over 23,000 comments and 10,000,000 page views. Bill Gasarch joined me officially as a co-blogger over 15 years ago on March 30, 2007.

We've seen major results in computational complexity but the biggest challenges remain, in particular major separations of complexity classes. We've also had a front row seat to a computing revolution with the growth of cloud and mobile computing, social networks connecting us for better or for worse, and incredible successes of machine learning. It's almost as though we've been handed an oracle that gives us much of the goodness of P = NP while leaving cryptography intact.

What will the next twenty years bring? We'll be watching, posting and tweeting. Hope you keep reading and commenting.

Thursday, August 18, 2022

Conference Modality

We have had an almost normal summer conference season, for some sense of normal. At one of those conferences I participated in an hybrid conversation about whether the conference should be in-person, virtual or hybrid the following year. Here are some general thoughts.

In-Person

The traditional conference format. People travel from near and far to a hotel, conference center or campus location. Talks given in large rooms, often in parallel. A reception, some social activities, participants gathering in small groups to go out for meals.

Positives: In-person maximizes interaction between participants. Being physically away from your home means you can focus your time on the conference and your fellow participants. This was more true before the mobile devices/laptops/internet days, but still most participants will spend more time on-site than on-line.

Negatives: Expensive--with registration, hotel and air fare, even a domestic participant could have to pay $2000 or up, higher for those traveling internationally. Visas can be hard to get. Some still feel unsafe in large groups. People often leave early, pity the last speakers. And don't forget the carbon footprint.

As countries declare war on other countries or states deny certain rights, there is a push against meetings in certain places. Note the disclaimer for next year's FCRC. You might upset some people if you have conferences at these locations (and others if you don't).

Virtual

Virtual conferences would never in the past have been taken seriously but Covid forced our hands.

Talks are streamed or pre-recorded. Some interaction with chats in talks, zoom get togethers or though a systems like virtual chair. Even if we had a perfect "metaverse" experience where we could get together as though we were in person, not being there in person means we wouldn't make it a priority.

The big advantages are costs are low, it's easy to attend talks, and no danger of spreading disease. Still a virtual conference can feel too much like just a collection of streamed and recorded talks.

Hybrid

So let's make the conference hybrid and have the best of both worlds. Alas, it doesn't work out that way. It's nearly impossible to have good interaction between in-person and virtual participants--basically you have to run two separate meetings. Do you allow virtual talks or require an author to show up in person.

How do you set prices? Lower prices for virtual increases assess but decreases in-person attendance. Participants (or their advisors) might opt to save expenses and attend virtually instead of in-person, reducing the networking opportunities for everyone.

Most conferences tend to take the hybrid route to avoid the complaints if one went fully in-person or virtual, but hybrid just pretty much guarantees a mediocre experience for all.

Opinion

My suggestion is some years run the conference virtually and others in hybrid. We already have too many conferences, a byproduct of our field using conferences as the primary publication venue. I suggest following conferences like the International Congress of Mathematicians or the Game Theory World Congress, held every four years. If the main conference of a field is held every four years, researchers, particularly senior researchers, make a bigger effort to be there. You can have the virtual meetings the other years so researchers, particularly students, can continue to present their work.

No easy solutions and CS conferences have not worked well for years. Maybe the struggle to define future conferences will allow us to focus more on the connecting researchers than just "journals that meet in a hotel".

Monday, August 15, 2022

A non-controversial question about the Documents Donald Trump had in his house

Monday, August 08, 2022

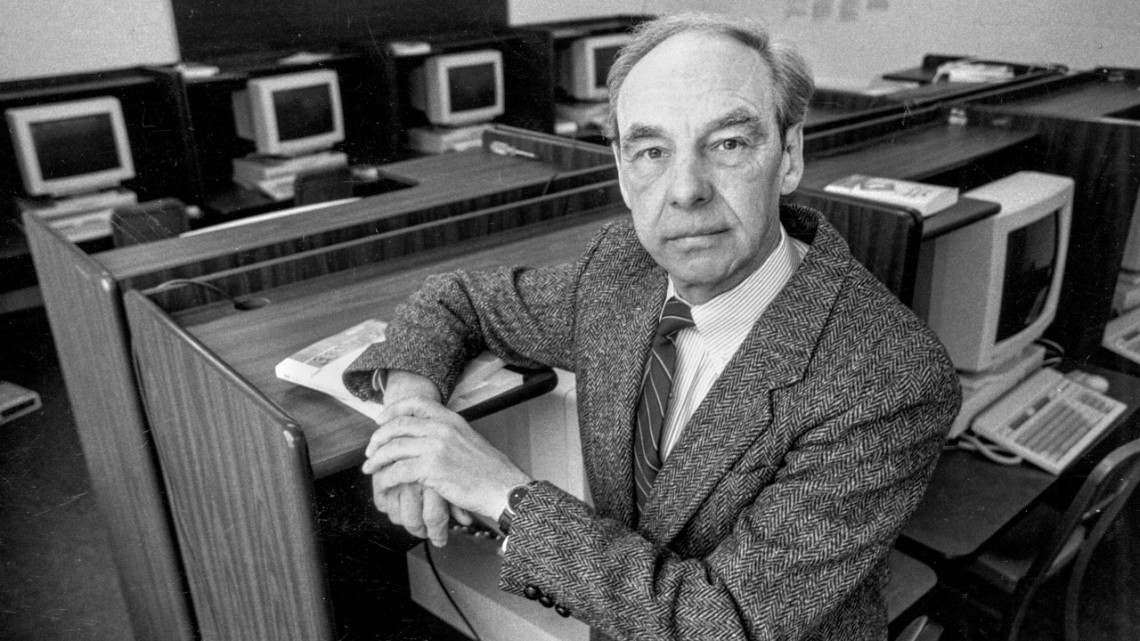

The Godfather of Complexity

|

| Juris Hartmanis 1928-2022 |

On Friday, July 29th, I was in the immigration line at an airport in Mexico. My phone rang with Bill Gasarch on the Caller ID but starting vacation I declined the call. The voicemail gave me the sad news that Juris Hartmanis, the person who founded computational complexity and brought me into it passed away earlier that day. I messaged Bill and told him to write an obit and I'd follow with something personal when I returned.

|

| Hartmanis and Stearns in 1963 |

|

| Juris Hartmanis (center) being toasted by Janos Simon |

- "We all know P is different than NP. We just don't know how to prove it." - Still true.

- "I only make mistakes in the last five minutes of the class." - Sometimes he made a mistake with ten minutes left but only admit it in the last five minutes.

- "Primality is a problem not yet know to be in P but is hanging on by its fingernails with its grip continuing to loosen each day." - Juris Hartmanis said this in 1986, with primality hanging on for another 16 years.

- In 1981 Hartmanis wrote a personal article for the Annals of History of Computing describing the birth of computational complexity and his role in it.

- A CACM interview with Hartmanis gives a personal story of his path from Latvia to Kansas City to Pasadena to Schenectady to Ithaca and DC.

- Obits by Cornell, Ryan Williams and Dick Lipton and Ken Regan.

- My old blog posts celebrating Hartmanis' 90th birthday and the 50th anniversary of Hartmanis-Stearns,

- And the many retrospectives, remembrances, special issues and conferences to come.

Sunday, August 07, 2022

The Held Prize for comb. opt. AND Disc Opt AND Alg AND Complexity theory AND related parts of CS.

Dan Spielman asked me to blog about the Held Prize. I first present what he send me, and then have some thoughts.

FROM DAN:

--------------------------------------------------------------------------------------------------

Nominations are now being accepted for the National Academy of Sciences’ 2023 Michael and Sheila Held Prize. The Held Prize honors outstanding, innovative, creative, and influential research in the areas of combinatorial and discrete optimization, or related parts of computer science, such as the design and analysis of algorithms and complexity theory. This $100,000 prize is intended to recognize recent work (defined as published within the last eight years). Additional information, including past recipients, eligibility requirements, and more, can be found at here.

All nominations must be submitted online. Unless otherwise stated, the following materials must be submitted:

A letter from the nominator describing the candidate's work and why he or she should be selected for the award. No more than three (3) pages.

Curriculum vitae. No more than two (2) pages (similar to CVs included with NSF proposals).

Bibliography listing no more than twelve (12) of the nominee's most significant publications.

Suggested citation. A 50-word summary stating why the nominee should be considered for this award. (Citation

examples)

Two letters of support. Support letters must be written by individuals from institutions outside both the

nominator's and the nominee’s institution. Up to three letters of support are accepted.

Nominations will be accepted through Monday, October 3, 2022. Please help spread the word that the nomination process is underway.

----------------------------------------------------------------------------------------------------

BILL COMMENTS

1) The scope seems rather broad (Dan confirmed this in private email) in that its Comb Opt AND Discrete Opt OR related fields like algorithms and complexity theory.

2) The research has to be Outstanding AND Innovative AND creative AND influential. That seems hard to do :-( If they made it an OR instead of an AND I may ask someone to nominate me for my Muffin Work. It does use 0-1 programming!

3) The past winners are, of course, very impressive. But there is one I want to point out to emphasize that the scope is broad: Amit Sahai won in 2022, and here is what the webpage says about it:

For a leading role in development of cryptographic Software Obfuscation and its applications, starting from initial conception of "Indistinguishability Obfuscation" and culminating in new constructions based upon well-founded cryptographic assumptions. These breakthroughs highlight how computational complexity can enable secrecy while computing in insecure environments.

4) Comb Opt and Discrete Opt seem to be Operations Research. So this inspires the following question:

What are the similarities and differences between Operations Research and Research on Algorithms?

I tend to think of Operations Research as being more tied to the real world- but is that true?

5) Not enough 2-letter combinations for what you want to say: I had to use the term Operations Research instead of the abbreviation OR since I was using OR for or. Oh well.